[ad_1]

Picture by Creator | Ideogram

ETL — that means Extract, Rework, Load — is a course of that strikes and prepares information for subsequent use, akin to information evaluation or machine studying modelling. ETL is a vital exercise for information scientists, because it allows us to amass the required information for our work.

To help the ETL course of, varied instruments can be found to facilitate our work, and one in every of them is DuckDB. DuckDB is an open-source OLAP SQL database administration system designed to deal with information analytics workloads with in-memory processing successfully. It’s a superb device for information scientists, whatever the measurement of the info being labored with.

Creating an information science ETL pipeline is essential for information scientists; it’s important to grasp the method completely. On this article, we are going to discover ways to create an ETL pipeline utilizing DuckDB.

Preparation

First, we are going to arrange all the required parts to simulate the ETL pipeline in a real-world information science mission. All of the code demonstrated on this article can be out there within the GitHub repository.

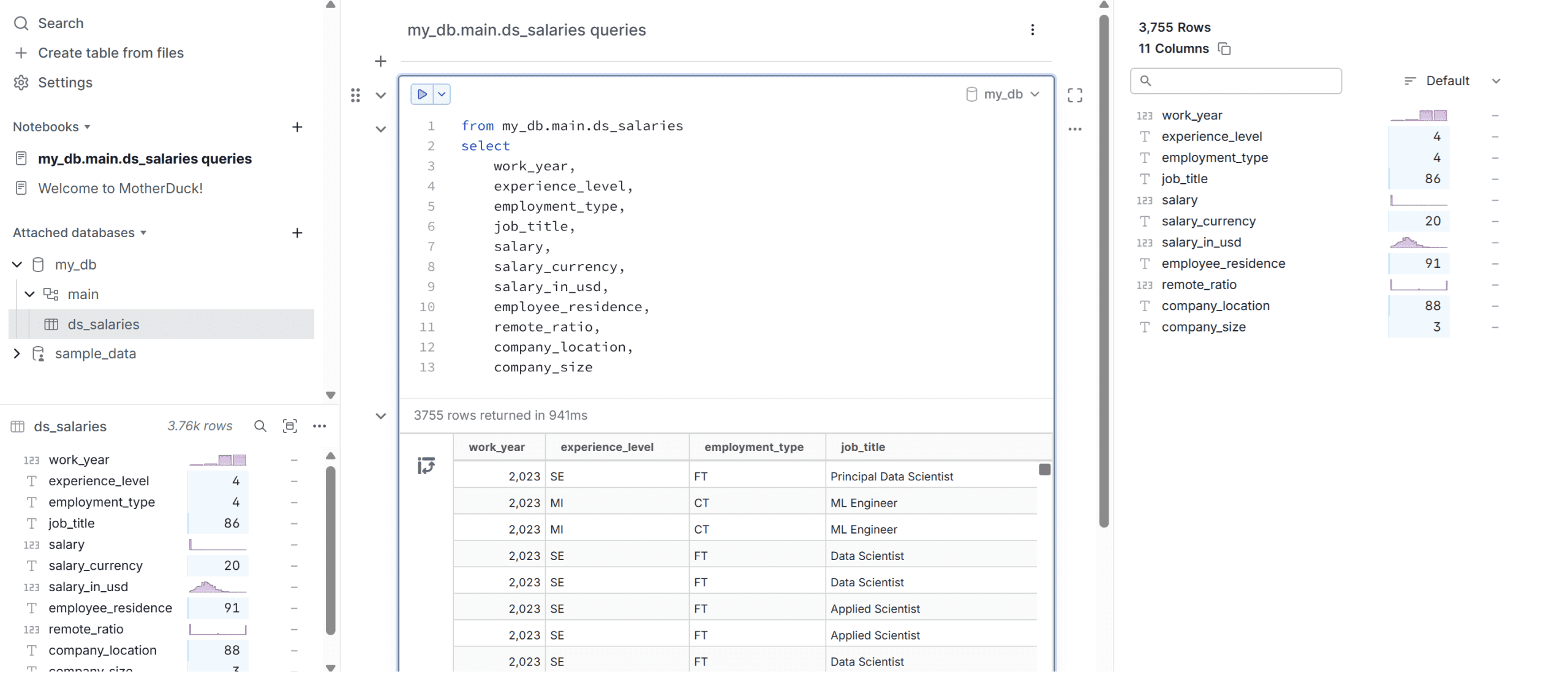

The very first thing we’d like would be the dataset for our article. On this instance, we are going to use the info scientist wage information from Kaggle. For the info warehouse, we are going to make the most of the DuckDB-powered cloud information warehouse, often called Motherduck. Register for a free account, then choose to create a desk from the recordsdata utilizing the info science wage information, and place them within the my_db database.

When you full this step, you may then question the dataset, and it will likely be displayed as proven within the picture beneath.

As soon as the database is prepared, purchase the entry tokens, which we are going to use to entry the cloud database.

Subsequent, open your IDE, akin to Visible Studio Code, to arrange the pipeline surroundings. Step one is to create the digital surroundings, which could be finished utilizing the next code.

python -m venv duckdb_venv

You possibly can change the digital surroundings title to any title you want. Activate the digital surroundings, and we are going to set up all of the required libraries. Create a textual content file referred to as necessities.txt and fill it with the next library names.

duckdb

pandas

pyarrow

python-dotenv

With the file prepared, we are going to set up the library required for the mission utilizing the code beneath.

pip set up -r necessities.txt

If each library is efficiently put in, we are going to arrange the surroundings variable utilizing the .env file. Create the file and insert the MOTHERDUCK_TOKEN inside, utilizing the token you simply acquired from Motherduck.

Now that the preparation is full, let’s proceed with establishing the ETL pipeline utilizing DuckDB.

ETL pipelines with DuckDB

Working with DuckDB is just like working with SQL operations, however with a lot easier connectivity. We’ll make the most of the DuckDB in-memory function to course of our information by operating queries within the Python surroundings however we are going to load the info again into the Motherduck cloud database.

First, create a Python file that can comprise the ETL pipeline. I made a file referred to as etl_duckdb.py, however you should utilize completely different names if you happen to choose.

Contained in the file, we are going to discover tips on how to arrange the info science ETL pipeline with DuckDB. Initially, we might want to join DuckDB to the cloud database to retrieve the required information.

import os

import duckdb

from dotenv import load_dotenv

load_dotenv()

MD_TOKEN = os.getenv("MOTHERDUCK_TOKEN")

con = duckdb.join(f'md:?motherduck_token={MD_TOKEN}')

After that, we are going to create a schema named analytics to retailer the info we extract.

con.sql("CREATE SCHEMA IF NOT EXISTS analytics;")

You possibly can see that the operations in DuckDB are precisely how you’ll use the SQL queries. In case you are already acquainted with SQL operations, then creating the pipeline will turn out to be a lot simpler.

Subsequent, we are going to extract the uncooked information into one other desk simply to indicate you that it’s attainable to make use of DuckDB for extracting and loading the identical information into one other desk.

con.sql("""

CREATE OR REPLACE TABLE raw_salaries AS

SELECT

work_year,

experience_level,

employment_type,

job_title,

wage,

salary_currency,

salary_in_usd,

employee_residence,

remote_ratio,

company_location,

company_size

FROM my_db.ds_salaries;

""")

With the info ready, we are able to carry out any transformations and cargo the modified information for subsequent evaluation.

For instance, let’s rework the info into common wage information primarily based on work 12 months and expertise stage, which we are going to load into the desk avg_salary_year_exp.

con.sql("""

CREATE OR REPLACE TABLE analytics.avg_salary_year_exp AS

SELECT

work_year,

experience_level,

ROUND(AVG(salary_in_usd), 2) AS avg_usd_salary

FROM raw_salaries

GROUP BY work_year, experience_level

ORDER BY work_year, experience_level;

""")

Let’s test the remodeled information that we have now loaded into the desk utilizing the next code.

con.sql("SELECT * FROM analytics.avg_salary_year_exp LIMIT 5").present()

The result’s a desk proven within the output beneath.

┌───────────┬──────────────────┬────────────────┐

│ work_year │ experience_level │ avg_usd_salary │

│ int64 │ varchar │ double │

├───────────┼──────────────────┼────────────────┤

│ 2020 │ EN │ 57511.61 │

│ 2020 │ EX │ 139944.33 │

│ 2020 │ MI │ 87564.72 │

│ 2020 │ SE │ 137240.5 │

│ 2021 │ EN │ 54905.25 │

└───────────┴──────────────────┴────────────────┘

Utilizing DuckDB, we are able to effectively carry out ETL with none problem.

As DuckDB is kind of versatile in serving to our operations, we are able to additionally make the most of Pandas to carry out ETL operations.

For instance, we are able to take the earlier common wage information and rework it right into a DataFrame object, the place we are able to rework it even additional.

df_avg = con.sql("SELECT * FROM analytics.avg_salary_year_exp").df()

df_avg["avg_salary_k"] = df_avg["avg_usd_salary"] / 1_000

We are able to see the results of the DataFrame we have now remodeled utilizing the code beneath.

The place the output is just like the one beneath.

work_year experience_level avg_usd_salary avg_salary_k

0 2020 EN 57511.61 57.51161

1 2020 EX 139944.33 139.94433

2 2020 MI 87564.72 87.56472

3 2020 SE 137240.50 137.24050

4 2021 EN 54905.25 54.90525

Utilizing the DataFrame above, we are able to register it in DuckDB, which is able to deal with the DataFrame as a desk utilizing the code beneath.

con.register("pandas_avg_salary", df_avg)

The Pandas DataFrame is now prepared for additional processing; for instance, we are able to rework the info and reload it into the cloud database.

con.sql("""

CREATE OR REPLACE TABLE analytics.avg_salary_year_exp_pandas AS

SELECT

work_year,

experience_level,

avg_salary_k

FROM pandas_avg_salary

WHERE avg_salary_k > 100

ORDER BY avg_salary_k DESC

""")

You possibly can see the consequence utilizing the code beneath.

con.sql("SELECT * FROM analytics.avg_salary_year_exp_pandas LIMIT 5").present()

The output is proven beneath.

┌───────────┬──────────────────┬──────────────┐

│ work_year │ experience_level │ avg_salary_k │

│ int64 │ varchar │ double │

├───────────┼──────────────────┼──────────────┤

│ 2023 │ EX │ 203.70568 │

│ 2022 │ EX │ 188.26029 │

│ 2021 │ EX │ 186.128 │

│ 2023 │ SE │ 159.56893 │

│ 2022 │ SE │ 147.65969 │

└───────────┴──────────────────┴──────────────┘

That’s all you could develop a easy ETL pipeline for an information science mission. You possibly can prolong the pipeline with automation and a scheduler utilizing a CRON job, relying on the mission necessities.

Conclusion

ETL, or Extract, Rework, Load, is a course of that strikes and prepares information for additional utilization. For an information scientist, ETL is beneficial for any work that requires information, akin to information evaluation or machine studying modelling.

On this article, we have now realized tips on how to create an ETL pipeline for information science work utilizing DuckDB. We demonstrated tips on how to extract information from a cloud database, remodeled it utilizing SQL queries and Pandas DataFrames, and loaded it again into the cloud database.

I hope this has helped!

Cornellius Yudha Wijaya is an information science assistant supervisor and information author. Whereas working full-time at Allianz Indonesia, he likes to share Python and information ideas through social media and writing media. Cornellius writes on quite a lot of AI and machine studying matters.

[ad_2]