Robert Triggs / Android Authority

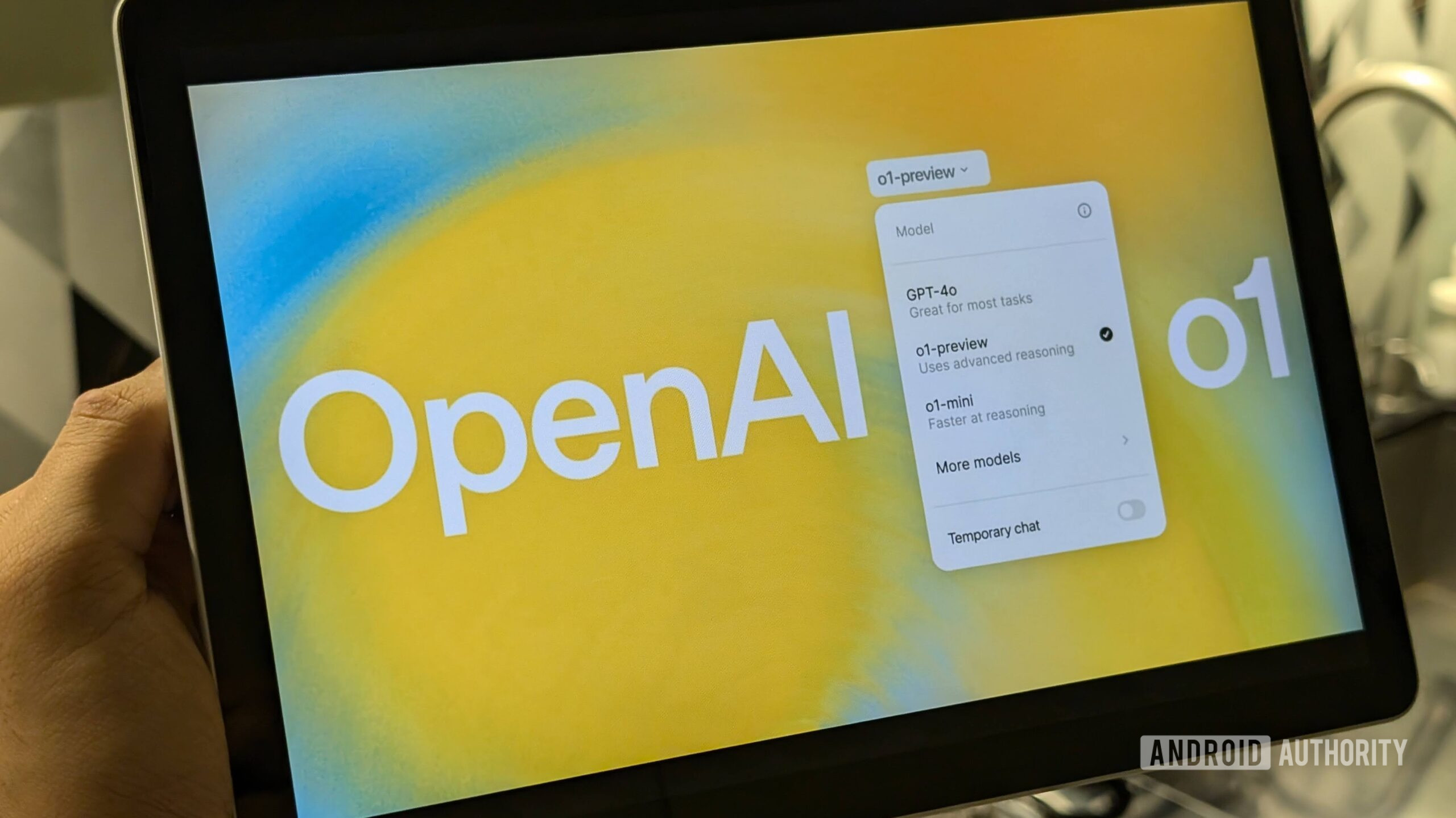

Another day, another large language model, but news that OpenAI has released its first open-weight models (gpt-oss) with Apache 2.0 licensing is a bigger deal than most. Finally, you can run a version of ChatGPT offline and for free, giving developers and us casual AI enthusiasts another powerful tool to try out.

As usual, OpenAI makes some pretty big claims about gpt-oss’s capabilities. The model can apparently outperform o4-mini and scores quite close to o3, which are OpenAI’s cost-efficient and most powerful reasoning models, respectively. However, that gpt-oss model comes in at a colossal 120 billion parameters, requiring some serious computing kit to run. For you and me, though, there’s still a highly performant 20 billion parameter model available.

Can you now run ChatGPT offline and for free? Well, it depends.

In theory, the 20 billion parameter model will run on a modern laptop or PC, provided you have bountiful RAM and a powerful CPU or GPU to crunch the numbers. Qualcomm even claims it’s excited about bringing gpt-oss to its compute platforms (think PC rather than mobile). Still, this does beg the question: Is it possible to now run ChatGPT entirely offline and on-device, for free, on a laptop or even your smartphone? Well, it’s doable, but I wouldn’t recommend it.

What do you need to run gpt-oss?

Edgar Cervantes / Android Authority

Despite shrinking gpt-oss from 120 billion to 20 billion parameters for more general use, the official quantized model still weighs in at a hefty 12.2GB. OpenAI specifies VRAM requirements of 16GB for the 20B model and 80GB for the 120B model. You need a machine capable of holding the whole thing in memory at once to achieve reasonable performance, which puts you firmly into NVIDIA RTX 4080 territory for sufficient dedicated GPU memory. That’s hardly something we all have access to.

For PCs with less GPU VRAM, you’ll want 16GB of system RAM if you can split some of the model into GPU memory, and preferably a GPU capable of crunching FP4 precision data. For everything else, such as typical laptops and smartphones, 16GB is really cutting it fine, as you need room for the OS and apps too. Based on my experience, 24GB of RAM is what you need; my 7th Gen Surface Laptop, complete with a Snapdragon X processor and 16GB RAM, worked at an admittedly pretty decent 10 tokens per second, but barely held on even with every other application closed.
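To make the RAM arithmetic above concrete, here is a minimal sketch of a headroom check. The 12.2GB file size and 20 billion parameter count come from the figures quoted above; the allowances for the OS, apps, and conversation context are my own illustrative assumptions, not measurements, and the function names are hypothetical.

```python
# Rough memory-headroom check for holding a local model entirely in RAM.
# Values marked "assumed" are illustrative guesses, not measurements.

MODEL_FILE_GB = 12.2   # quantized gpt-oss 20b size quoted in the article
PARAMS_BILLION = 20    # parameter count quoted in the article


def effective_bits_per_param(file_gb: float, params_billion: float) -> float:
    """Average storage per parameter in bits, treating 1GB as 10^9 bytes."""
    return file_gb * 8 / params_billion


def headroom_gb(
    total_ram_gb: float,
    model_gb: float = MODEL_FILE_GB,
    os_and_apps_gb: float = 4.0,  # assumed reserve for the OS and open apps
    context_gb: float = 1.5,      # assumed allowance for prompt and responses
) -> float:
    """RAM left over once the model, OS, and context are accounted for."""
    return total_ram_gb - model_gb - os_and_apps_gb - context_gb


if __name__ == "__main__":
    bits = effective_bits_per_param(MODEL_FILE_GB, PARAMS_BILLION)
    print(f"Effective bits per parameter: {bits:.2f}")
    for ram in (16, 24):
        spare = headroom_gb(ram)
        verdict = "fits" if spare > 0 else "does not fit comfortably"
        print(f"{ram}GB RAM: {spare:+.1f}GB headroom ({verdict})")
```

With these assumed reserves, a 16GB machine ends up with negative headroom while a 24GB machine has a few gigabytes to spare, which lines up with the experience described above. Adjust the reserves to match your own system.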

Despite its smaller size, gpt-oss 20b still needs plenty of RAM and a powerful GPU to run smoothly.

Of course, with 24GB of RAM being ideal, the vast majority of smartphones cannot run it. Even AI leaders like the Pixel 9 Pro XL and Galaxy S25 Ultra top out at 16GB RAM, and not all of that is accessible. Thankfully, my ROG Phone 9 Pro has a colossal 24GB of RAM, which is enough to get me started.

How to run gpt-oss on a phone

Robert Triggs / Android Authority

For my first attempt to run gpt-oss on my Android smartphone, I turned to the growing selection of LLM apps that let you run offline models, including PocketPal AI, LLaMA Chat, and LM Playground.

However, these apps either didn’t have the model available or couldn’t successfully load the version I downloaded manually, possibly because they’re based on an older version of llama.cpp. Instead, I booted up a Debian partition on the ROG and installed Ollama to handle loading and interacting with gpt-oss. If you want to follow the steps, I did the same with DeepSeek earlier in the year. The drawback is that performance isn’t quite native, and there’s no hardware acceleration, meaning you’re reliant on the phone’s CPU to do the heavy lifting.
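Once Ollama is serving the model, you can talk to it from a script as well as from the terminal. The following is a minimal sketch, assuming Ollama is listening on its default local port (11434) and that the model was pulled under the tag `gpt-oss:20b`; both are assumptions about your setup, and the helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumed defaults: Ollama's standard local endpoint and a 20b model tag.
# Check `ollama list` on your own install and adjust if they differ.
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL_TAG = "gpt-oss:20b"


def build_request(prompt: str, model: str = MODEL_TAG) -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, timeout_s: float = 600.0) -> str:
    """Send the prompt and return the generated text.

    The long timeout is deliberate: CPU-only generation can take minutes.
    """
    with urllib.request.urlopen(build_request(prompt), timeout=timeout_s) as resp:
        return json.loads(resp.read())["response"]


if __name__ == "__main__":
    # Requires a running Ollama server with the model already downloaded.
    print(ask("Say hello in one short sentence."))
```

Setting `stream` to false keeps the example short by waiting for the whole reply; on a phone you would probably prefer streaming so that you can watch the text arrive.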

So, how well does gpt-oss run on a top-tier Android smartphone? Barely is the generous word I’d use. The ROG’s Snapdragon 8 Elite might be powerful, but it’s nowhere near my laptop’s Snapdragon X, let alone a dedicated GPU for data crunching.

gpt-oss can just about run on a phone, but it’s barely usable.

The token rate (the rate at which text is generated on screen) is barely adequate and certainly slower than I can read. I’d estimate it’s in the region of 2-3 tokens (about a word or so) per second. It’s not entirely terrible for short requests, but it’s agonising if you want to do anything more complex than say hello. Unfortunately, the token rate only gets worse as the size of your conversation increases, eventually taking several minutes to produce even a couple of paragraphs.
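If you would rather measure the token rate than eyeball it, Ollama’s non-streaming responses include an `eval_count` (tokens generated) and an `eval_duration` (in nanoseconds). Here is a minimal sketch of the arithmetic, with hypothetical helper names and an assumed 300-token reply standing in for a couple of paragraphs:

```python
def tokens_per_second(eval_count: int, eval_duration_ns: int) -> float:
    """Generation speed from a token count and a duration in nanoseconds."""
    return eval_count / (eval_duration_ns / 1e9)


def seconds_for_reply(reply_tokens: int, rate_tok_per_s: float) -> float:
    """How long a reply of a given length takes at a steady token rate."""
    return reply_tokens / rate_tok_per_s


if __name__ == "__main__":
    reply_tokens = 300  # assumed length of a couple of paragraphs
    for rate in (2.0, 3.0):  # the phone's estimated range from above
        wait = seconds_for_reply(reply_tokens, rate)
        print(f"{rate:.0f} tokens/s: about {wait / 60:.1f} minutes")
```

This assumes a steady rate, so treat it as a best case: as noted above, the rate falls as the conversation grows, and any tokens the model spends reasoning before it answers add to the wait.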

Robert Triggs / Android Authority

Obviously, mobile CPUs really aren’t built for this type of work, and certainly not for models approaching this size. The ROG is a nippy performer for my daily workloads, but it was maxed out here, with seven of the eight CPU cores running at 100% almost constantly, resulting in a rather uncomfortably hot handset after just a few minutes of chat. Clock speeds quickly throttled, causing token speeds to fall further. It’s not great.

With the model loaded, the phone’s 24GB was stretched as well, with the OS, background apps, and additional memory required for the prompt and responses all vying for space. When I needed to flick in and out of apps, I could, but this brought already sluggish token generation to a virtual standstill.

Another impressive model, but not for phones

Calvin Wankhede / Android Authority

Running gpt-oss on your smartphone is pretty much out of the question, even if you have a huge pool of RAM to load it up. External models aimed primarily at the developer community don’t support mobile NPUs and GPUs. The only way around that obstacle is for developers to leverage proprietary SDKs like Qualcomm’s AI SDK or Apple’s Core ML, which won’t happen for this sort of use case.

Still, I was determined not to give up and tried gpt-oss on my aging PC, equipped with a GTX 1070 and 24GB RAM. The results were definitely better, at around four to five tokens per second, but still slower than my Snapdragon X laptop running on the CPU alone. Yikes.

In both cases, the 20b parameter version of gpt-oss certainly seems impressive (after waiting a while), thanks to its configurable chain of reasoning that lets the model “think” for longer to help solve more complex problems. Compared to free options like Google’s Gemini 2.5 Flash, gpt-oss is the more capable problem solver thanks to its use of chain-of-thought, much like DeepSeek R1, which is all the more impressive given it’s free. However, it’s still not as powerful as the mightier and more expensive cloud-based models, and it certainly doesn’t run anywhere near as fast on any consumer gadgets I own.

Still, advanced reasoning in the palm of your hand, without the cost, security concerns, or network compromises of today’s subscription models, is the AI future I think laptops and smartphones should truly aim for. There’s clearly a long way to go, especially when it comes to mainstream hardware acceleration, but as models become both smarter and smaller, that future feels increasingly tangible.

A few of my flagship smartphones have proven quite adept at running smaller 8 billion parameter models like Qwen 2.5 and Llama 3, with surprisingly quick and powerful results. If we ever see a similarly speedy version of gpt-oss, I’d be much more excited.
