By Petros Koutoupis, VDURA

With all the excitement around artificial intelligence and machine learning, it's easy to lose sight of which high-performance computing storage requirements are essential to deliver real, transformative value for your organization.

When evaluating a data storage solution, one of the most common performance metrics is input/output operations per second (IOPS). It has long been the standard for measuring storage performance, and depending on the workload, a system's IOPS can be critical.

In practice, when a vendor advertises IOPS, they're really showcasing how many discontiguous 4 KiB reads or writes the system can handle under the worst-case scenario of fully random I/O. Measuring storage performance by IOPS is only meaningful if the workloads are IOPS-intensive (e.g., databases, virtualized environments, or web servers). But as we move into the era of AI, the question remains: does IOPS still matter?
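To relate an advertised IOPS figure to bandwidth, multiply it by the I/O size. The following is a minimal sketch of that arithmetic; the function names and the 1,000,000 IOPS figure are hypothetical and are not taken from any vendor specification.

```python
KIB = 1024
MIB = 1024 * KIB


def iops_to_bandwidth_bytes(iops: int, io_size_bytes: int = 4 * KIB) -> int:
    """Bytes per second moved by `iops` operations of `io_size_bytes` each."""
    return iops * io_size_bytes


def bandwidth_to_iops(bandwidth_bytes_per_s: float, io_size_bytes: int) -> float:
    """Operations per second needed to sustain a bandwidth at a given I/O size."""
    return bandwidth_bytes_per_s / io_size_bytes


# Hypothetical example: one million random 4 KiB operations per second.
random_4k = iops_to_bandwidth_bytes(1_000_000)
print(f"1M IOPS at 4 KiB = {random_4k / 1e9:.3f} GB/s")

# The same bandwidth delivered as 1 MiB sequential transfers needs far fewer operations.
print(f"Equivalent 1 MiB operations per second: {bandwidth_to_iops(random_4k, MIB):.2f}")
```

The same bandwidth can correspond to very different operation counts depending on the I/O size, which is why an IOPS figure on its own says little about large sequential transfers.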

A Breakdown of Your Standard AI Workload

AI workloads run across the entire data lifecycle, and each stage puts its own spin on GPU compute (with CPUs supporting orchestration and preprocessing), storage, and data management resources. Here are some of the most common types you'll come across when building and rolling out AI solutions.

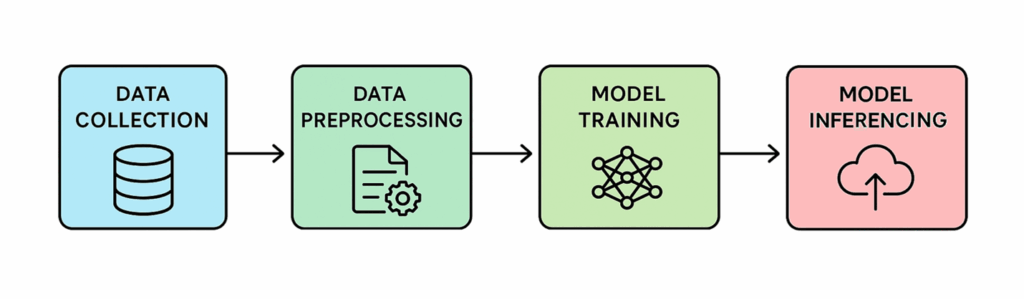

AI workflows (source: VDURA)

Data Ingestion & Preprocessing

During this stage, raw data is collected from sources such as databases, social media platforms, IoT devices, and APIs, then fed into AI pipelines to prepare it for analysis. Before that analysis can happen, however, the data must be cleaned: removing inconsistencies and corrupt or irrelevant entries, filling in missing values, and aligning formats (such as timestamps or units of measurement), among other tasks.

Model Training

After the data is prepped, it's time for the most demanding phase: training. Here, large language models (LLMs) are built by processing data to spot patterns and relationships that drive accurate predictions. This stage leans heavily on high-performance GPUs, with frequent checkpoints to storage so training can quickly recover from hardware or job failures. In many cases, some degree of fine-tuning or similar adjustments may also be part of the process.

Fine-Tuning

Model training typically involves building a foundation model from scratch on large datasets to capture broad, general knowledge. Fine-tuning then refines this pre-trained model for a specific task or domain using smaller, specialized datasets, improving its performance.

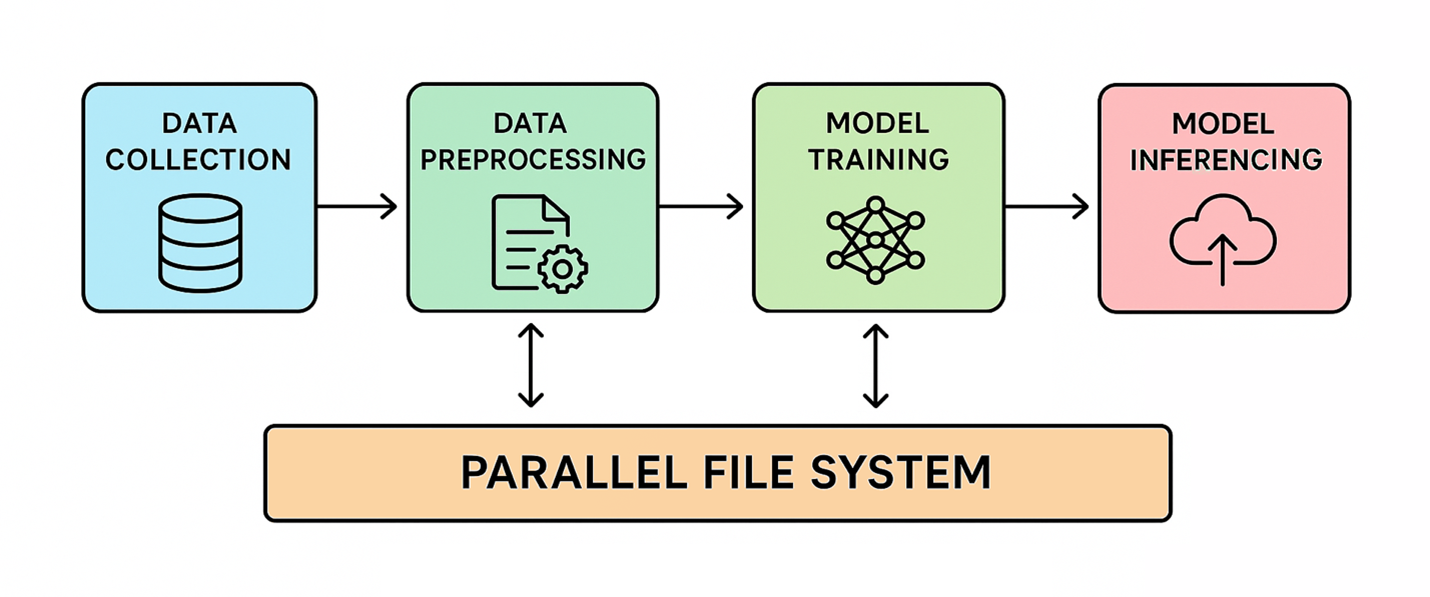

AI workflows (source: VDURA)

Model Inference

Once trained, the AI model can make predictions on new, rather than historical, data by applying the patterns it has learned to generate actionable outputs. For example, if you show the model a picture of a dog it has never seen before, it will predict: "That is a dog."

How High-Performance File Storage is Affected

An HPC parallel file system breaks data into chunks and distributes them across multiple networked storage servers. This allows many compute nodes to access the data simultaneously at high speeds. As a result, this architecture has become essential for data-intensive workloads, including AI.
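As an illustration of how data can be split and spread across servers, here is a minimal sketch that assumes simple round-robin striping. Real parallel file systems use their own layout policies, so the `stripe_layout` function, its parameters, and the sizes shown are hypothetical.

```python
def stripe_layout(
    file_size: int, stripe_size: int, num_servers: int
) -> dict[int, list[tuple[int, int]]]:
    """Map each storage server to the (offset, length) chunks it would hold.

    Assumes round-robin placement: chunk i goes to server i % num_servers.
    """
    if stripe_size <= 0 or num_servers <= 0:
        raise ValueError("stripe_size and num_servers must be positive")

    layout: dict[int, list[tuple[int, int]]] = {s: [] for s in range(num_servers)}
    offset = 0
    chunk_index = 0
    while offset < file_size:
        # The final chunk may be shorter than the stripe size.
        length = min(stripe_size, file_size - offset)
        layout[chunk_index % num_servers].append((offset, length))
        offset += length
        chunk_index += 1
    return layout


# Hypothetical example: a 10-byte file, 4-byte stripes, 2 servers.
for server, chunks in stripe_layout(10, 4, 2).items():
    print(f"server {server}: {chunks}")
```

Because consecutive chunks land on different servers, a large sequential read can be served by several servers at once, which is where the aggregate bandwidth comes from.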

During the data ingestion phase, raw data comes from many sources, and parallel file systems may play a limited role. Their importance increases during preprocessing and model training, where high-throughput systems are needed to quickly load and transform large datasets. This reduces the time required to prepare datasets for both training and inference.

Checkpointing during model training periodically saves the current state of the model to protect against progress loss due to interruptions. This process requires all nodes to save the model's state simultaneously, demanding high peak storage throughput to keep checkpointing time minimal. Insufficient storage performance during checkpointing can extend training times and increase the risk of data loss.
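A rough way to see the effect of storage throughput on checkpointing is to divide the checkpoint size by the aggregate write bandwidth. The sketch below assumes a synchronous checkpoint that pauses training while it is written; the checkpoint size, bandwidths, and interval are hypothetical values chosen only for illustration.

```python
def checkpoint_write_seconds(checkpoint_bytes: float, aggregate_bytes_per_s: float) -> float:
    """Time to write one checkpoint at a given aggregate storage throughput."""
    return checkpoint_bytes / aggregate_bytes_per_s


def checkpoint_overhead_fraction(write_seconds: float, interval_seconds: float) -> float:
    """Share of wall-clock time spent checkpointing, assuming training
    pauses for the whole write (a simplifying assumption)."""
    return write_seconds / (interval_seconds + write_seconds)


TB = 10**12
GB = 10**9

# Hypothetical: 1 TB of model state, checkpointed after every 30 minutes of training.
for bandwidth in (10 * GB, 100 * GB):
    seconds = checkpoint_write_seconds(1 * TB, bandwidth)
    overhead = checkpoint_overhead_fraction(seconds, interval_seconds=1800)
    print(f"{bandwidth / GB:.0f} GB/s: {seconds:.0f} s per checkpoint, {overhead:.1%} overhead")
```

Under these assumptions, a tenfold increase in aggregate throughput cuts the pause per checkpoint tenfold, which is the sense in which checkpointing is bound by bandwidth.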

It's evident that AI workloads are driven by throughput, not IOPS. Training large models requires streaming massive sequential datasets, often gigabytes to terabytes in size, into GPUs. The real bottleneck is aggregate bandwidth (GB/s or TB/s), rather than handling millions of small, random I/O operations per second. Inefficient storage can create bottlenecks, leaving GPUs and other processors idle, slowing training, and driving up costs.

Requirements based solely on IOPS can significantly inflate the storage budget or rule out the most suitable architectures. Parallel file systems, on the other hand, excel in throughput and scalability. To meet specific IOPS targets, production file systems are often over-engineered, adding cost or unnecessary capabilities, rather than being designed for maximum throughput.

Conclusion

AI workloads demand high-throughput storage rather than high IOPS. While IOPS has long been a standard metric, modern AI, particularly during data preprocessing, model training, and checkpointing, relies on moving massive sequential datasets efficiently to keep GPUs and compute nodes fully utilized. Parallel file systems provide the necessary scalability and bandwidth to handle these workloads effectively, while focusing solely on IOPS can lead to over-engineered, costly solutions that don't optimize training performance. For AI at scale, throughput and aggregate bandwidth are the true drivers of productivity and cost efficiency.

Author: Petros Koutoupis has spent more than 20 years in the data storage industry, working for companies that include Xyratex, Cleversafe/IBM, Seagate, Cray/HPE and, now, AI and HPC data platform company VDURA.