A new security vulnerability targeting Amazon Machine Images (AMIs) has emerged, exposing organizations and users to potential exploitation.

Dubbed the “whoAMI name confusion attack,” this flaw allows attackers to publish malicious virtual machine images under misleading names, tricking unsuspecting users into deploying them within their Amazon Web Services (AWS) infrastructure.

Understanding the AMI Vulnerability

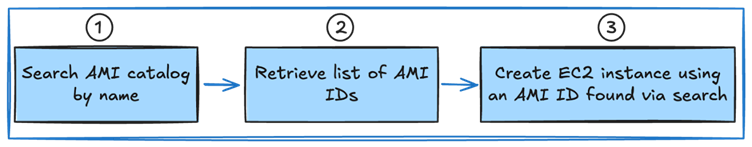

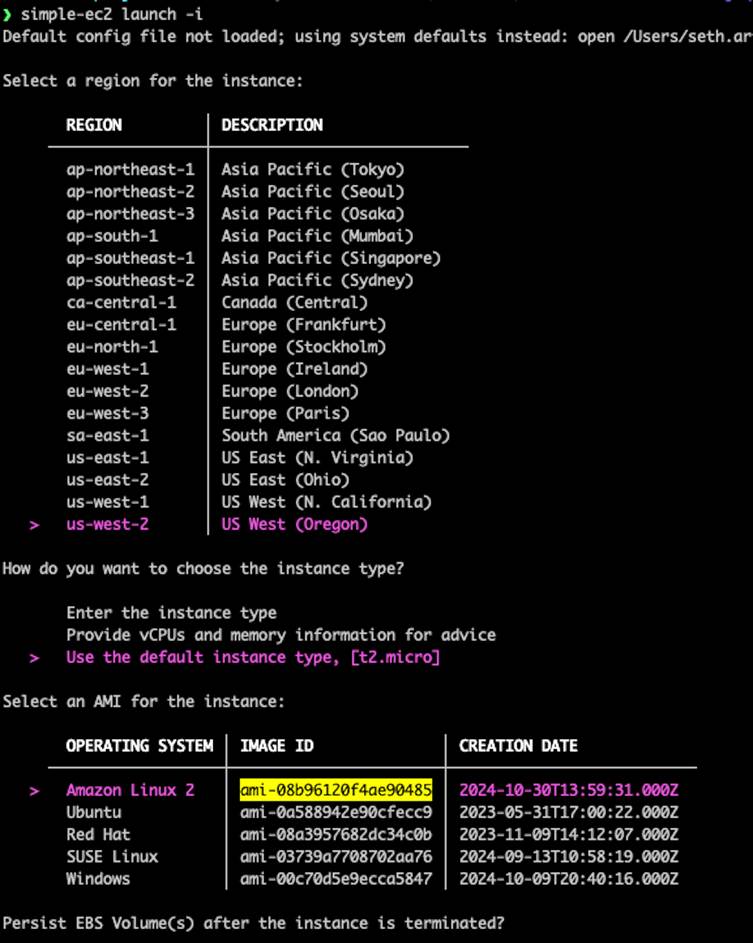

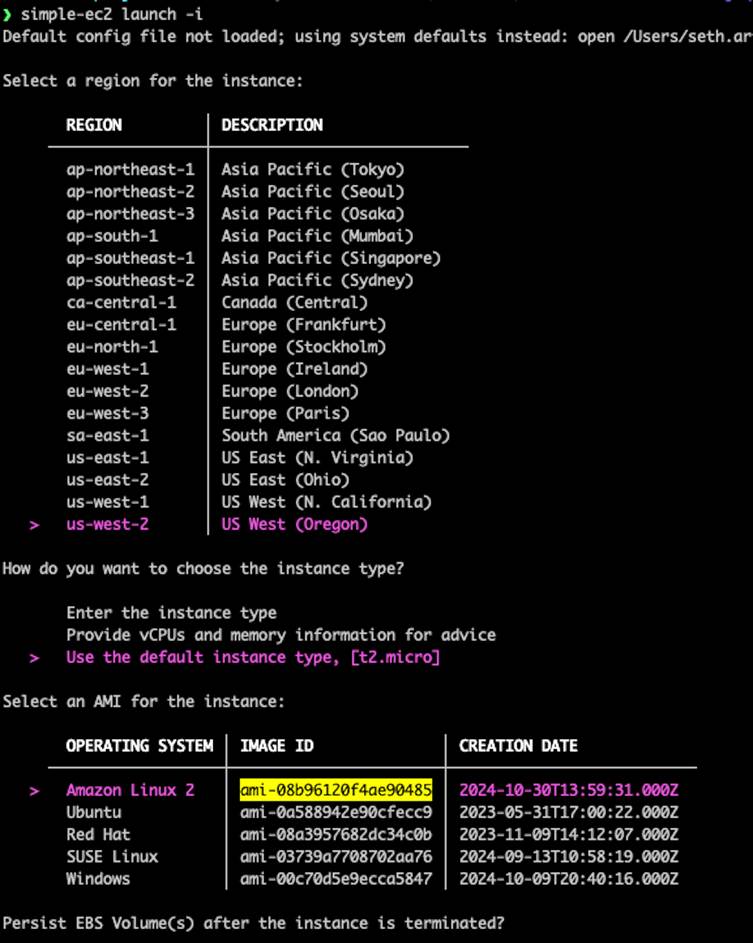

Amazon Machine Images (AMIs) are preconfigured virtual machine templates used to launch EC2 instances in AWS.

While AMIs can be private, public, or purchased through the AWS Marketplace, users often rely on AWS's search functionality, via the ec2:DescribeImages API, to find the latest AMIs for specific operating systems or configurations.

However, if users or organizations fail to apply specific safeguards, such as specifying trusted “owners” during the AMI search, they may inadvertently launch an unverified or malicious image.

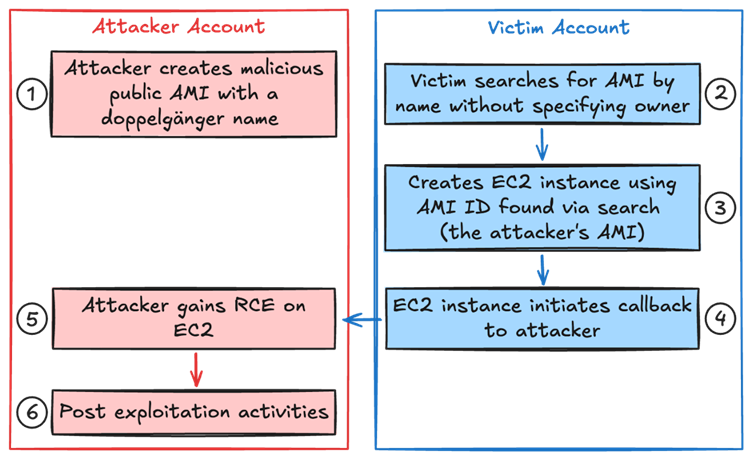

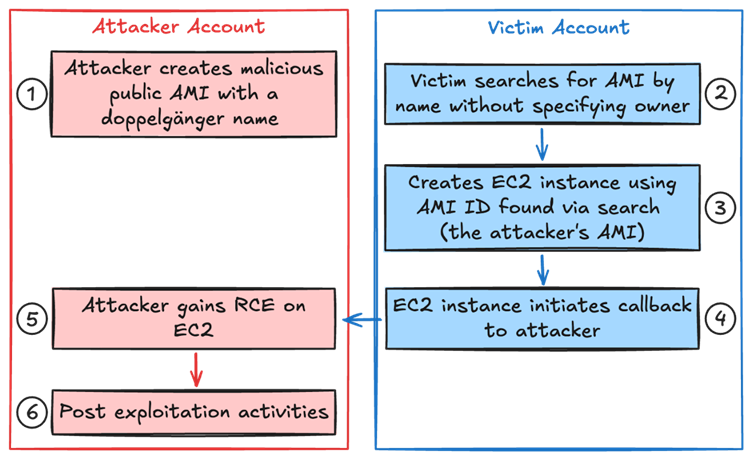

This vulnerability, classified as a name confusion attack, exploits situations where organizations rely on AMI names or name patterns without verifying the image's source or owner, according to a report by Datadog Security Labs.

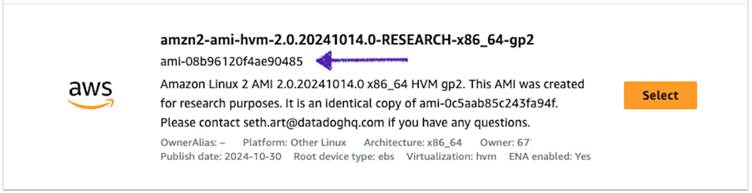

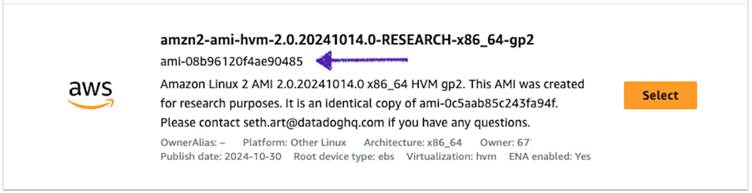

By publishing a malicious AMI whose name resembles legitimate ones (e.g., a name matching a pattern such as “ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*”), attackers can make their AMI appear as the “latest” result in searches.

Once deployed, these malicious AMIs can act as backdoors, exfiltrating sensitive data or enabling unauthorized access to systems.

In one reported case, researchers demonstrated the attack by creating a malicious AMI named “ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-whoAMI” that mimicked legitimate resources.

Vulnerable configurations successfully retrieved and used this malicious AMI.
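The Python sketch below shows why a simple suffix is enough. It checks a few names against the wildcard pattern quoted above, using the standard library's `fnmatchcase` as an approximation of the `*` wildcard in EC2 name filters. This is not AWS's matching code. The dated Ubuntu names are hypothetical examples.

```python
from fnmatch import fnmatchcase

# Wildcard pattern from the article; '*' matches any run of characters.
PATTERN = "ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"

# Hypothetical candidates: a dated, vendor-style name, the researchers'
# attacker-controlled name, and an unrelated Ubuntu release.
candidates = [
    "ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-20250101",
    "ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-whoAMI",
    "ubuntu/images/hvm-ssd/ubuntu-jammy-22.04-amd64-server-20250101",
]

# Case-sensitive glob match; nothing here looks at who published the image.
matches = [name for name in candidates if fnmatchcase(name, PATTERN)]
for name in matches:
    print(name)
```

Both the legitimate-looking name and the “-whoAMI” name satisfy the pattern. A name-only search cannot tell them apart.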

Assault Mechanics and Exploitation

The vulnerability stems from a misconfiguration in how AMI searches are performed.

For example, the following Terraform code for AMI retrieval is vulnerable because the “owners” argument is omitted:

```hcl
data "aws_ami" "ubuntu" {
  most_recent = true

  # No "owners" argument: any AMI visible to the account, including public AMIs
  # from other accounts, whose name matches this pattern is a candidate.
  filter {
    name   = "name"
    values = ["ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-*"]
  }
}
```

This configuration causes Terraform to query the ec2:DescribeImages API, which returns a list of all AMIs matching the search criteria, including those from untrusted or malicious sources.

If most_recent = true is set, Terraform automatically selects the newest matching AMI, which could be an attacker's malicious image.
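The selection step can be modeled in a few lines of Python. This is a simplified sketch of most_recent-style behavior, in which the newest CreationDate wins. It is not Terraform's actual implementation. The `Image` type, the `pick_most_recent` helper, and all account and image IDs are hypothetical placeholders.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Set


@dataclass(frozen=True)
class Image:
    image_id: str
    name: str
    owner_id: str
    creation_date: str  # ISO 8601, e.g. "2025-01-01T00:00:00.000Z"; sorts correctly as text


def pick_most_recent(
    images: Iterable[Image], owners: Optional[Set[str]] = None
) -> Optional[Image]:
    """Mimic a most_recent-style lookup: the newest creation_date wins.

    If owners is None, every matching image is a candidate (the vulnerable case).
    """
    pool = [img for img in images if owners is None or img.owner_id in owners]
    if not pool:
        return None
    return max(pool, key=lambda img: img.creation_date)


# Hypothetical search results for the Ubuntu name pattern: one image from a
# trusted publisher account and one newer image from an attacker's account.
results = [
    Image("ami-legit", "ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-20250101",
          "111111111111", "2025-01-01T00:00:00.000Z"),
    Image("ami-attacker", "ubuntu/images/hvm-ssd/ubuntu-focal-20.04-amd64-server-whoAMI",
          "222222222222", "2025-02-01T00:00:00.000Z"),
]

print(pick_most_recent(results).image_id)                           # ami-attacker
print(pick_most_recent(results, owners={"111111111111"}).image_id)  # ami-legit
```

Without an owner restriction, the attacker only has to publish a newer image. With one, the attacker's image never enters the candidate pool.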

Attackers can exploit this by publishing public AMIs whose names include keywords like “amzn”, “ubuntu”, or other well-known patterns, so that automated or manual searches select their AMI.

Once selected, the malicious AMI can carry backdoors, malware, or other harmful components, making it a serious threat to cloud security.

Mitigation and Prevention

- Use Owner Filters: Always specify owners when querying AMIs. Trusted values include amazon, aws-marketplace, or well-known AWS account IDs such as 137112412989 (Amazon Linux). Example of a safer AWS CLI query:

  ```bash
  aws ec2 describe-images \
    --filters "Name=name,Values=amzn2-ami-hvm-*-x86_64-gp2" \
    --owners "137112412989"
  ```

- Adopt AWS's Allowed AMIs Feature: Introduced in December 2024, this feature lets AWS customers create an allowlist of trusted AMI providers, ensuring that EC2 instances are launched only from images published by verified accounts.

- Update Terraform and IaC Tools: Make sure you are using up-to-date versions of Terraform and other infrastructure-as-code tools. Recent updates add warnings or errors for unsafe AMI searches (e.g., the Terraform AWS provider's aws_ami data source now warns users if most_recent = true is used without specifying owners).

- Perform Code Audits: Use tools like Semgrep to search for risky patterns across your codebase, including Terraform, CLI scripts, and code written in languages like Python, Go, and Java. Example Semgrep rule to detect this risk:

  ```yaml
  rules:
    - id: missing-owners-in-aws-ami
      languages:
        - terraform
      severity: WARNING
      message: aws_ami data source uses most_recent = true without an owners argument
      patterns:
        # Match any aws_ami data source that picks the most recent image...
        - pattern: |
            data "aws_ami" $NAME {
              ...
              most_recent = true
              ...
            }
        # ...unless the same block restricts the search to specific owners.
        - pattern-not: |
            data "aws_ami" $NAME {
              ...
              owners = $OWNERS
              ...
            }
  ```

- Monitor Existing Instances: Use tools like the open-source whoAMI-scanner to audit your AWS accounts for instances launched from unverified AMIs. The tool lists instances that use AMIs that are public or not on an allowlist.
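Scripts that call ec2:DescribeImages directly can enforce the owner filter in code. The Python sketch below assumes a boto3-style EC2 client, meaning `describe_images` accepts `Owners` and `Filters` and returns an `Images` list whose entries carry `ImageId` and `CreationDate`. The helper name `find_latest_ami` is hypothetical, and pagination is omitted for brevity. Passing the client in as a parameter keeps the function testable without AWS credentials.

```python
from typing import Any, Sequence


def find_latest_ami(ec2_client: Any, name_pattern: str, owners: Sequence[str]) -> str:
    """Return the ImageId of the newest AMI matching name_pattern from the given owners.

    ec2_client is assumed to behave like boto3.client("ec2").
    Pagination is omitted for brevity.
    """
    if isinstance(owners, str):
        owners = [owners]  # avoid treating one account ID as a sequence of characters
    if not owners:
        # Refuse owner-less searches: that is the pattern the whoAMI attack abuses.
        raise ValueError("owners must be specified when searching for AMIs")

    response = ec2_client.describe_images(
        Owners=list(owners),
        Filters=[{"Name": "name", "Values": [name_pattern]}],
    )
    images = response.get("Images", [])
    if not images:
        raise LookupError(f"no AMI named like {name_pattern!r} from owners {list(owners)}")
    # CreationDate is an ISO 8601 string, so the lexicographic max is the newest image.
    return max(images, key=lambda img: img["CreationDate"])["ImageId"]
```

With boto3 installed and credentials configured, a call might look like `find_latest_ami(boto3.client("ec2"), "amzn2-ami-hvm-*-x86_64-gp2", ["137112412989"])`.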

AWS has acknowledged the potential impact of this vulnerability and has worked with the researchers to address it.

According to AWS's statement, the affected systems within AWS were non-production, and no customer data was exposed.

In addition, AWS introduced the Allowed AMIs feature to mitigate such risks and has encouraged customers to enable this guardrail.
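The allowlist idea behind Allowed AMIs can also be applied retroactively to running workloads. This is the kind of audit the whoAMI-scanner performs. The Python sketch below is a simplified, hypothetical illustration, not AWS's enforcement logic or the scanner's code. It takes an instance-to-AMI mapping and an AMI-to-owner mapping, which in practice would be gathered from describe-instances and describe-images calls. It then flags instances whose AMI owner is not allowlisted or cannot be resolved.

```python
from typing import Dict, List, Set


def find_unallowed_instances(
    instance_to_image: Dict[str, str],
    image_to_owner: Dict[str, str],
    allowed_owners: Set[str],
) -> List[str]:
    """Return IDs of instances whose AMI owner is unknown or not on the allowlist."""
    flagged = []
    for instance_id, image_id in sorted(instance_to_image.items()):
        owner = image_to_owner.get(image_id)  # None if the image could not be resolved
        if owner not in allowed_owners:
            flagged.append(instance_id)
    return flagged


# Hypothetical inventory: one trusted image, one attacker image, and one image
# whose owner could not be looked up (for example, because it was deregistered).
instances = {"i-aaa": "ami-legit", "i-bbb": "ami-attacker", "i-ccc": "ami-deregistered"}
image_owners = {"ami-legit": "111111111111", "ami-attacker": "222222222222"}

print(find_unallowed_instances(instances, image_owners, {"111111111111"}))
# ['i-bbb', 'i-ccc']
```

Treating an unresolvable owner as untrusted is a deliberately conservative choice. An image that can no longer be described cannot be shown to come from an allowlisted account.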

The “whoAMI” vulnerability underscores the critical need for secure configurations and due diligence in cloud environments.

Organizations should adopt secure practices, such as validating AMI ownership during searches and using AWS's new security features.

With potentially thousands of accounts affected, vigilance is essential to protecting sensitive workloads and data on AWS.
