
GenAI is everywhere: available as a standalone tool, as proprietary LLMs, or embedded in applications. Because everyone can easily access it, it also presents security and privacy risks, so CISOs are doing what they can to stay on top of it while protecting their companies with policies.

“As a CISO who has to approve an organization’s usage of GenAI, I need to have a centralized governance framework in place,” says Sammy Basu, CEO and founder of cybersecurity solution provider Careful Security. “We need to educate employees about what information they can enter into AI tools, and they should refrain from uploading client confidential or restricted information because we don’t have clarity on where the data may end up.”

Specifically, Basu created security policies and simple AI dos and don’ts addressing AI usage for Careful Security clients. As is typical these days, people are uploading information into AI models to stay competitive. However, Basu says a regular user would need security gateways built into their AI tools to identify and redact sensitive information. In addition, GenAI IP laws are ambiguous, so it’s not always clear who owns the copyright of AI-generated content that has been altered by a human.
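The kind of gateway Basu describes, one that identifies and redacts sensitive information before a prompt reaches an AI tool, could be sketched roughly as follows. This is a minimal illustration, not a description of any vendor's product: the `PATTERNS` table and the `redact` function are hypothetical names, and the two regular expressions are simplistic stand-ins for the vetted detection rules a production gateway would need.

```python
import re
from typing import Dict, Tuple

# Hypothetical detection rules for illustration only. A real gateway would
# rely on vetted detectors tuned to the organization's own data types.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(prompt: str) -> Tuple[str, Dict[str, int]]:
    """Replace each detected item with a placeholder such as [EMAIL].

    Returns the redacted prompt plus a count of hits per category, so the
    caller can log or block the request if anything sensitive was found.
    """
    counts: Dict[str, int] = {}
    for label, pattern in PATTERNS.items():
        prompt, hits = pattern.subn(f"[{label}]", prompt)
        if hits:
            counts[label] = hits
    return prompt, counts
```

A caller would pass every outgoing prompt through `redact` and forward only the returned text to the AI tool. The hit counts give the security team a signal about how often employees attempt to submit restricted data.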

From Cautious Curiosity to Risk-Aware Adoption

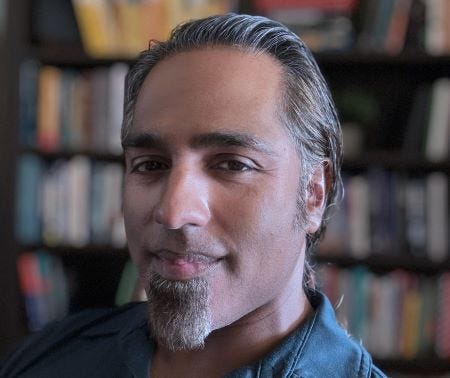

Ed Gaudet, CEO and founder of healthcare risk management solution provider Censinet, says that over time, as both a user and a CISO, his GenAI experience has shifted from cautious curiosity to a more structured, risk-aware adoption of GenAI capabilities.

“It’s undeniable that GenAI opens a vast array of opportunities, though careful planning and continuous learning remain critical to contain the risks that it brings,” says Gaudet. “I was initially cautious about GenAI because of data privacy, IP protection and misuse. Early versions of GenAI tools, for instance, highlighted how input data was stored or used for further training. But as the technology has improved and providers have put better safeguards in place, such as data opt-outs and secure APIs, I’ve come to see what it can do when used responsibly.”

Gaudet believes sensitive or proprietary data should never be input into GenAI systems, such as OpenAI or proprietary LLMs. He has also made it mandatory for teams to use only vetted and authorized tools, preferably those that run in secure, on-premises environments to reduce data exposure.

Ed Gaudet, Censinet

“One of the significant challenges has been educating non-technical teams on these policies,” says Gaudet. “GenAI is considered a ‘black box’ solution by many users, and they do not always understand all the potential risks associated with data leaks or the creation of misinformation.”

Patricia Thaine, co-founder and CEO at data privacy solution provider Private AI, says curating data for machine learning is complicated enough without having to additionally think about access controls, purpose limitation, and the protection of personal and confidential company information going to third parties.

“This was never going to be an easy task, no matter when it happened,” says Thaine. “The success of this gargantuan endeavor depends almost entirely on whether organizations can maintain trust with proper AI governance in place and whether we have finally understood just how fundamentally important meticulous data curation and quality annotations are, regardless of how large a model we throw at a task.”

The Risks Can Outweigh the Benefits

More workers are using GenAI for brainstorming, generating content, writing code, research, and analysis. While it has the potential to make valuable contributions to various workflows as it matures, too much can go wrong without the proper safeguards.

“As a [CISO], I view this technology as presenting more risks than benefits without proper safeguards,” says Harold Rivas, CISO at global cybersecurity company Trellix. “Several companies have poorly adopted the technology in the hopes of promoting their products as innovative, but the technology itself has continued to impress me with its staggeringly rapid evolution.”

However, hallucinations can get in the way. Rivas recommends conducting experiments in controlled environments and implementing guardrails for GenAI adoption. Without them, companies can fall victim to high-profile cyber incidents, as some did when first adopting cloud.

Dev Nag, CEO of support automation company QueryPal, says he had initial, well-founded concerns around data privacy and control, but the landscape has matured significantly in the past year.

“The emergence of edge AI solutions, on-device inference capabilities, and private LLM deployments has fundamentally changed our risk calculation. Where we once had to choose between functionality and data privacy, we can now deploy models that never send sensitive data outside our control boundary,” says Nag. “We’re running quantized open-source models within our own infrastructure, which gives us both predictable performance and complete data sovereignty.”

The standards landscape has also evolved. The release of NIST’s AI Risk Management Framework and concrete guidance from major cloud providers on AI governance provide clear frameworks to audit against.

“We’ve implemented these controls within our existing security architecture, treating AI much like any other data-processing capability that requires appropriate safeguards. From a practical standpoint, we’re now running different AI workloads based on data sensitivity,” says Nag. “Public-facing functions might leverage cloud APIs with appropriate controls, while sensitive data processing happens exclusively on private infrastructure using our own models. This tiered approach lets us maximize utility while maintaining strict control over sensitive data.”
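The tiered approach Nag outlines can be pictured as a small routing rule keyed on data classification. The sketch below is an assumption-laden illustration rather than QueryPal's actual design: the `Sensitivity` levels, the backend labels and the `choose_backend` function are all hypothetical names introduced here.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    """Hypothetical data classification levels, lowest to highest."""
    PUBLIC = 0
    INTERNAL = 1
    RESTRICTED = 2


# Hypothetical backend labels; a real deployment would map these to clients.
CLOUD_API = "cloud-api"
PRIVATE_MODEL = "private-model"


def choose_backend(level: Sensitivity) -> str:
    """Route a workload to a backend based on its data classification.

    Only data explicitly classified as public may go to a cloud API.
    Everything else stays on private infrastructure, so an unexpected or
    newly added classification level fails closed rather than open.
    """
    if level == Sensitivity.PUBLIC:
        return CLOUD_API
    return PRIVATE_MODEL
```

Defaulting to the private model for anything not marked public is a deliberate choice in this sketch: a misclassified or unlabeled workload costs some convenience rather than exposing data.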

Dev Nag, QueryPal

The rise of enterprise-grade AI platforms with SOC 2 compliance, private instances and no-data-retention policies has also expanded QueryPal’s options for semi-sensitive workloads.

“When combined with proper data classification and access controls, these platforms can be safely integrated into many business processes. That said, we maintain rigorous monitoring and access controls around all AI systems,” says Nag. “We treat model inputs and outputs as sensitive data streams that need to be tracked, logged and audited. Our incident response procedures specifically account for AI-related data exposure scenarios, and we regularly test these procedures.”
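One way to treat model inputs and outputs as tracked, logged and audited data streams is to wrap every model call in an audit layer. The following is a rough sketch under stated assumptions: `audited`, `model_call` and `audit_log` are hypothetical names, the in-memory list stands in for a real append-only log store, and hashing the content instead of storing it is an illustrative choice that keeps sensitive text out of the log itself.

```python
import hashlib
import json
import time
from typing import Callable, List


def audited(model_call: Callable[[str], str],
            audit_log: List[str],
            user: str) -> Callable[[str], str]:
    """Wrap a model call so each request and response leaves an audit record.

    The record stores SHA-256 digests rather than raw text, so the log can
    show that a specific prompt was sent without retaining its content.
    """
    def wrapper(prompt: str) -> str:
        response = model_call(prompt)
        record = {
            "ts": time.time(),  # seconds since the epoch
            "user": user,
            "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
            "response_sha256": hashlib.sha256(response.encode("utf-8")).hexdigest(),
        }
        audit_log.append(json.dumps(record))
        return response

    return wrapper
```

During an incident investigation, a suspected leaked string can be hashed and compared against the logged digests to see whether, when and by whom it was submitted.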

GenAI Is Improving Cybersecurity Detection and Response

Greg Notch, CIO at managed detection and response service provider Expel, says GenAI’s ability to quickly explain what happened during a security incident to both SOC analysts and impacted parties goes a long way toward improving efficiency and increasing accountability in the SOC.

“[GenAI] is already proving to be a game-changer for security operations,” says Notch. “As AI technologies flood the market, companies face the dual challenge of evaluating these tools’ potential and managing risks effectively. CISOs must cut through the ‘noise’ of various GenAI technologies to identify actual risks and align security programs accordingly, investing significant time and effort into crafting policies, assessing new tools and helping the business understand tradeoffs. Plus, training cybersecurity teams to assess and use these tools is essential, albeit costly. It’s simply the cost of doing business with GenAI.”

Adopting AI tools can also inadvertently shift a company’s security perimeter, making it crucial to educate employees about the risks of sharing sensitive information with GenAI tools in both their professional and personal lives. Clear acceptable use policies or guardrails should be in place to guide them.

“The real game-changer is outcome-based planning,” says Notch. “Leaders should ask, ‘What outcomes do we need to support our business goals? What security investments are required to support these goals? And do these align with our budget constraints and business objectives?’ This might involve scenario planning, imagining the costs of potential data loss, legal costs and other negative business impacts as well as prevention measures, to ensure budgets cover both immediate and future security needs.”

Scenario-based budgets help organizations allocate resources thoughtfully and proactively, maximizing long-term value from AI investments and minimizing waste. It’s about being prepared, not panicked, he says.

“Concentrating on basic security hygiene is the best way to protect your organization,” says Notch. “The No. 1 danger is letting unfounded AI threats distract organizations from hardening their standard security practices. Craft a plan for when an attack is successful, whether AI was a factor or not. Having visibility and a way to remediate is crucial for when, not if, an attacker succeeds.”
